Over the past decade, we have witnessed a boom in machine learning frameworks and libraries. Everything from social media and OTT platforms to voice cloning and virtual assistants is leveraging artificial neural networks and machine learning models to become better at understanding human perception. This chapter gives you a quick walkthrough of what a perceptron is and the types of perceptron.

What is a Perceptron?

A perceptron is an artificial model of a biological neuron and the basic unit from which an artificial neural network (ANN) is built. The term also refers to an early algorithm for learning binary classifiers in supervised learning. Frank Rosenblatt, an American psychologist, introduced the perceptron in 1957 at the Cornell Aeronautical Laboratory, in work funded by the United States Office of Naval Research. Rosenblatt was inspired by how a biological neuron works and how it can learn. The perceptron he proposed takes one or more inputs, processes them, and produces a single output. A single perceptron can only classify data that is linearly separable, that is, data whose two classes can be divided by a straight line (or, in higher dimensions, a hyperplane).

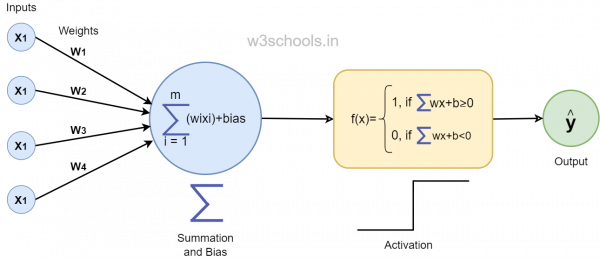

A perceptron consists of the following parts:

- Input Values or Single Input Layer: This is the first layer of the network, made up of artificial input neurons. It brings the initial data into the system for further computation. Here the values labeled ‘x1’ and so on are the inputs.

- Weights: A weight represents the strength of the connection between two units. The larger the weight from node 1 to node 2, the more influence neuron 1 has over neuron 2.

- Bias: This value acts like the intercept in a linear equation. It is an additional parameter that is added to the weighted sum of the inputs and shifts the neuron's output.

- Net Sum: This is the sum of every input multiplied by its associated weight, plus the bias.

- Activation Function: The activation function is a mathematical function that determines whether the artificial neuron gets activated or not. It is applied to the net sum (the weighted sum of the inputs plus the bias) to produce the neuron's output.
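
The parts listed above can be sketched in a few lines of plain Python. This is only a minimal illustration, not a reference implementation: the function names (`step`, `net_sum`, `perceptron`), the choice of a step activation with a threshold at zero, and the weight and bias values are all assumptions made for the example.

```python
def step(z):
    """Step activation (assumed here): the neuron fires (1) when z >= 0."""
    return 1 if z >= 0 else 0


def net_sum(inputs, weights, bias):
    """Net sum: each input times its weight, summed, plus the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def perceptron(inputs, weights, bias):
    """Output of one perceptron: the activation applied to the net sum."""
    return step(net_sum(inputs, weights, bias))


# Hypothetical values chosen for illustration only.
weights = [1.0, 1.0]
bias = -1.5

print(net_sum([1.0, 1.0], weights, bias))     # 0.5
print(perceptron([1.0, 1.0], weights, bias))  # 1
print(perceptron([1.0, 0.0], weights, bias))  # 0
```

With these particular values the neuron only activates when both inputs are 1, so changing the weights or the bias changes which inputs make it fire.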

Types of Perceptron

The architecture of an artificial neural network is defined by the pattern in which its nodes are connected, the total number of layers, and the number of nodes in each layer between the inputs and the output. Based on this architecture, there are two types of perceptron:

- Single-Layer Perceptron

- Multi-Layer Perceptron

Let's discuss these two separately.

Single-Layer Perceptron

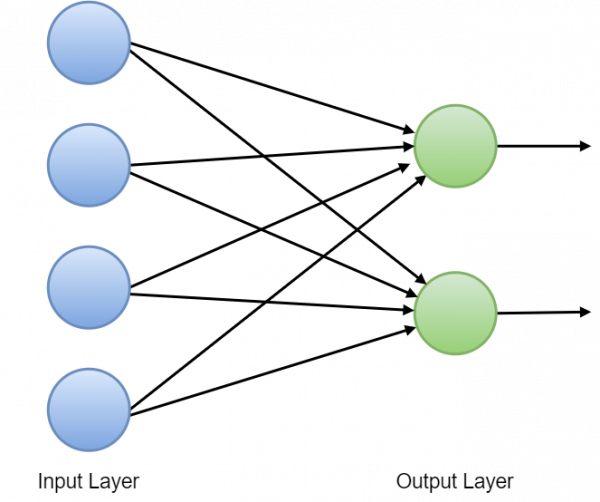

A single-layer perceptron is an artificial neural network with only one layer of computation. It contains no hidden layer: the input nodes are connected directly to the output layer, and each output node computes the weighted sum of its inputs. Because the structure is so simple, such models are straightforward to build and run in frameworks such as TensorFlow.

This is what a single-layer perceptron looks like:

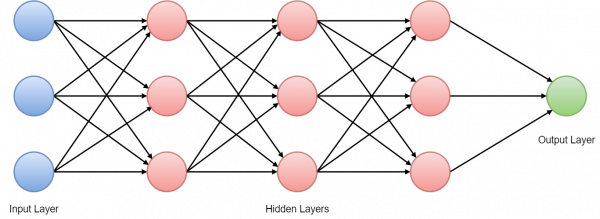
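
To make the single-layer case more concrete, here is a small sketch that trains one perceptron on the logical AND function, which is linearly separable. The update rule used is the classic perceptron learning rule, which this chapter does not describe, so treat it as an added assumption. The names `predict` and `train`, the learning rate, the epoch count, and the AND dataset are likewise hypothetical choices for the example.

```python
def predict(inputs, weights, bias):
    """Weighted sum of the inputs plus bias, passed through a step function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total >= 0 else 0


def train(samples, epochs=10, learning_rate=1.0):
    """Perceptron learning rule (assumed): nudge weights by the error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)
            # No change when the prediction is already correct (error == 0).
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Illustrative dataset: logical AND of two binary inputs.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train(AND_SAMPLES)
predictions = [predict(inputs, weights, bias) for inputs, _ in AND_SAMPLES]
print(predictions)  # [0, 0, 0, 1]
```

There is no hidden layer here: the two inputs feed the single output node directly, which is exactly the structure shown in the figure.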

Multi-Layer Perceptron

A multi-layer perceptron is a more complex artificial neural network built from multiple layers of perceptrons. It is a feed-forward network that generates a set of outputs from a set of inputs. In other words, a multi-layer perceptron can be viewed as a directed graph in which the layers are connected so that the signal travels in one direction only, from the input toward the output. The network comprises an input layer, an output layer, and one or more hidden layers in between. Each hidden layer consists of multiple perceptrons, termed hidden units. Many supervised learning models trained through TensorFlow use multi-layer perceptrons. This is what a multi-layer perceptron looks like:

Many modern neural network algorithms and machine learning models build on the multi-layer perceptron or use it as a component.
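
As a rough sketch of the layered, one-directional flow in a multi-layer perceptron, the example below passes inputs through one hidden layer with two hidden units and then through a single output unit. The weights are set by hand rather than learned, and they are chosen so that the network computes XOR, a function that is not linearly separable and so cannot be represented by a single perceptron. The XOR task, the step activation, and every name and value in the code are assumptions made for illustration.

```python
def step(z):
    """Step activation (assumed here): 1 when z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0


def layer(inputs, weight_rows, biases):
    """One feed-forward layer: each unit applies step to its own net sum."""
    return [
        step(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


# Hand-picked, hypothetical weights (not learned).
HIDDEN_WEIGHTS = [[1.0, 1.0], [-1.0, -1.0]]  # unit 1 acts as OR, unit 2 as NAND
HIDDEN_BIASES = [-0.5, 1.5]
OUTPUT_WEIGHTS = [[1.0, 1.0]]                # output unit acts as AND
OUTPUT_BIASES = [-1.5]


def mlp_forward(inputs):
    """Input layer -> hidden layer -> output layer, in one direction only."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


xor_outputs = [mlp_forward([a, b]) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)  # [0, 1, 1, 0]
```

In practice the weights of such a network are learned from data rather than written by hand, and frameworks such as TensorFlow take care of that training step.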